Saturday, 27 April 2024

Last week I attended KDE’s joint sprint on accessibility, sustainability and automation hosted at MBition in Berlin. Having had little opportunity to sit down and discuss things since Akademy 2023 and the KF6 release the scope grew even wider in the end, my notes below only cover a small subset of topics.

Sustainability

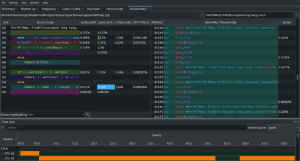

I was mainly involved regarding the energy measurement CI tooling here:

- Resolved some problems with measurement runs getting stuck while running standard usage scenarios.

- Set up VNC remote access to debug and resolve similar issues more easily in the future.

- Identified issues in the measurement data processing and reporting pipeline.

There was also some discussion around “sustainable” or “ethical” “AI”. While its pretty clear that open source implementations are strictly preferable over proprietary ones and that locally runnable systems are strictly preferable to hosted ones things get a lot more muddy when it comes to the licensing of the input data.

This boils down to the question to what extend we consider the output data derivative work of the input data. Besides the current legal interpretation (which I’d expect to be subject to change), this is also about how we see this regarding the spirit of share-alike licenses (xGPL, CC-BY-SA, etc).

Accessibility

Overall the most effort here probably went into learning and understanding the internals of the accessibility infrastructure in Qt, and exploring ways how we could make non-trivial things possible with that, something that should pay off going forward.

But there were also some immediate results:

- Submitted a patch to Qt that should make

interactive

ItemDelegateinstance in lists or combo boxes accessible by default. Those inherit fromAbstractButtonbut have their accessibility role changed which makes them lose the default interaction handling at the moment. - Made the time picker actually accessible and match how similar controls are represented e.g. by Firefox (MR).

- The date picker (while seemingly a very similar control conceptually) proved much harder, but David eventually found a solution for this as well (MR).

Automation

With the AT-SPI accessibility interface also being used for our test automation tooling all of the above also helps here.

- Implemented the missing plural version of finding elements of sub-elements in the AT-SPI Selenium driver (MR).

- Integrated Selenium tests for Itinerary, which then also already found the first bug (MR).

Improvements to monitoring the overall CI status also had some immediate effects:

- K3b, KImageMapEditor and Kirigami Gallery were switched to Qt 6 exclusively, having been identified as already ported.

poxml(an essential part of the documentation localization toolchain) was identified as not having been ported to Qt 6 yet, which meanwhile has been corrected.- Unit test failures in

kcalutilsandmessagelibwere fixed, allowing to enforce passing unit tests in those repositories. - Global CI monitoring also helped to identify gaps in CI seed jobs and pointed us to a Qt 6.7 regression affecting ECM, all of which was subsequently fixed.

Other topics

I also got the chance to look at details of the Akademy 2024 venue with David and Tobias (who are familiar with the building), to improve the existing OSM indoor mapping. Having that data for a venue opens new possibilities for conference companion apps, let’s see what we can get implemented in Kongress until September.

As Nate has reported already we didn’t stay strictly on topic. More discussions I was involved in included:

- Sharing code and components between Itinerary, KTrip and NeoChat. Some initial changes in that general direction have meanwhile been integrated.

- The implications of sunsetting QCA.

- Changes to the release process, product groupings and release frequencies.

You can help!

Getting a bunch of contributors into the same room for a few days is immensely valuable and productive. Being able to do that depends on people or organizations offering suitable venues and organizing such events, as well as on donations to cover the costs of travel and accommodation.

@vkrause:kde.org

@vkrause:kde.org

ngraham

ngraham