Thursday, 3 April 2025

Model/View Drag and Drop in Qt - Part 3

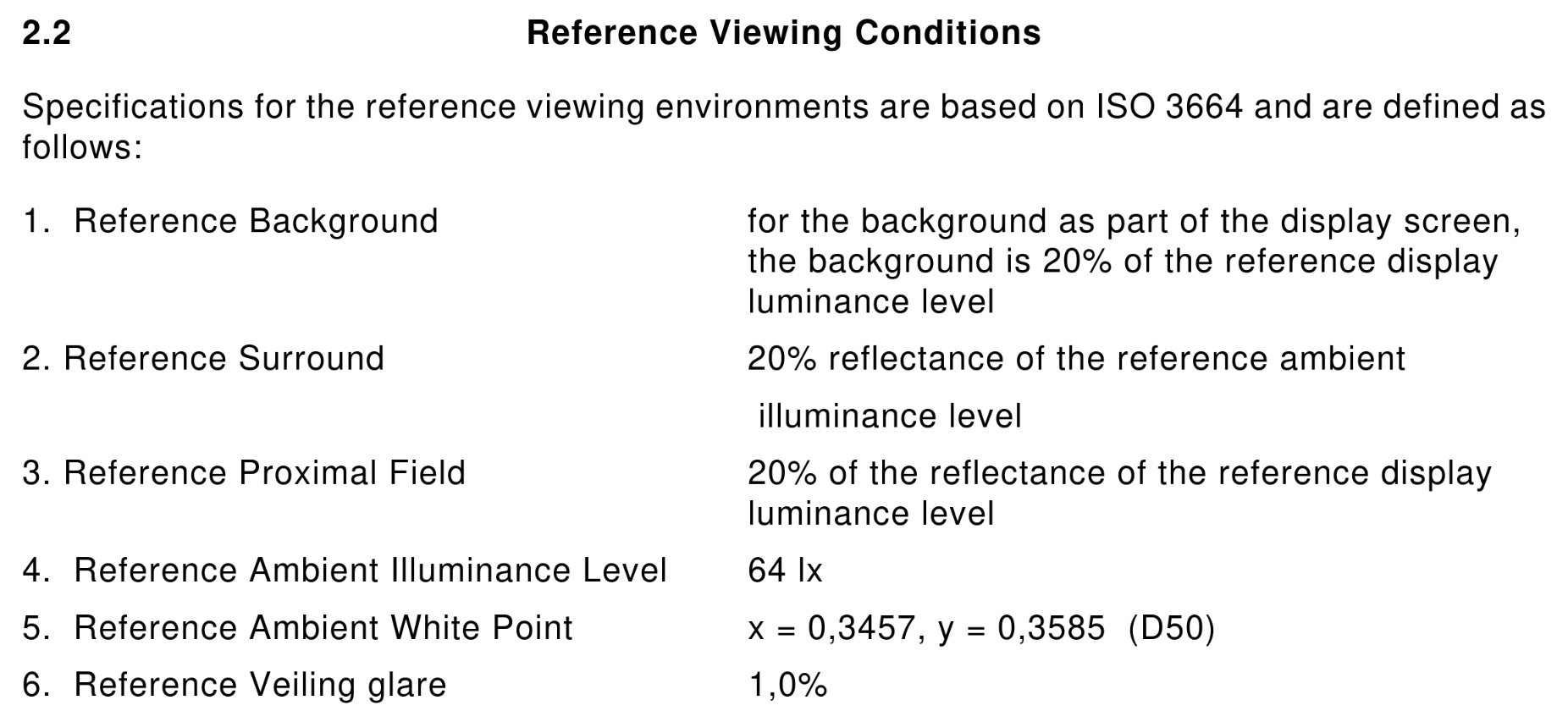

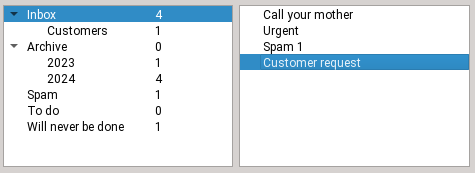

In this third blog post of the Model/View Drag and Drop series (part 1 and part 2), the idea is to implement dropping onto items, rather than in between items. QListWidget and QTableWidget have out-of-the-box support for replacing the value of existing items when doing that, but there aren't many use cases for it. It is much more common to associate custom semantics with such a drop. For instance, the examples detailed below show email folders and their contents, and dropping an email onto another folder will move (or copy) the email into that folder.

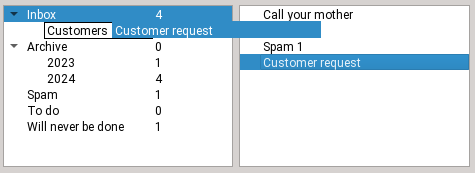

Step 1

Initial state, the email is in the inbox

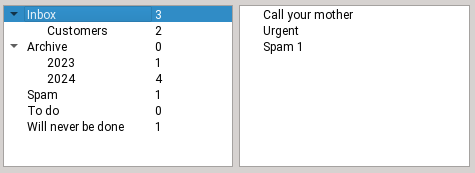

Step 2

Dragging the email onto the Customers folder

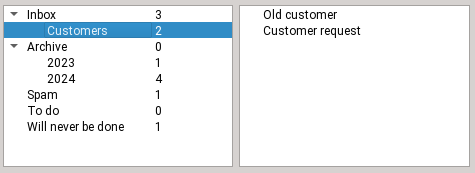

Step 3

Dropping the email

Step 4

The email is now in the customers folder

With Model/View separation

Example code can be found here for flat models and here for tree models.

Setting up the view on the drag side

☑ Call view->setDragDropMode(QAbstractItemView::DragOnly), unless of course the same view should also support drops. In our example, only emails can be dragged, and only folders allow drops, so the drag and drop sides are distinct.

☑ Call view->setDragDropOverwriteMode(...): true if moving should clear cells, false if moving should remove rows.

Note that the default is true for QTableView and false for QListView and QTreeView. In our example, we want to remove emails that have been moved elsewhere, so false is correct.

☑ Call view->setDefaultDropAction(Qt::MoveAction) so that the drag defaults to a move rather than a copy; adjust as needed.
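As a rough, Qt-free illustration of the overwrite-mode choice above, the sketch below models what happens on the drag side after a successful move. `finishMove` and the `std::vector` of strings are hypothetical stand-ins for the view's cleanup step and the model's storage; they are not Qt API.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Models the drag side once a move has been accepted:
// in overwrite mode the moved cell is cleared but the row stays,
// otherwise the whole row is removed.
void finishMove(std::vector<std::string> &rows, std::size_t movedRow, bool overwriteMode)
{
    if (overwriteMode)
        rows[movedRow].clear();
    else
        rows.erase(rows.begin() + static_cast<std::ptrdiff_t>(movedRow));
}
```

With three rows and row 1 moved away, overwrite mode leaves three rows with an empty middle cell, while non-overwrite mode leaves two rows. The email example wants the latter.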

Setting up the model on the drag side

To implement dragging items out of a model, you need to implement the following. This is very similar to the section of the same name in the previous blog post:

    class EmailsModel : public QAbstractTableModel
    {
        ~~~
        Qt::ItemFlags flags(const QModelIndex &index) const override
        {
            if (!index.isValid())
                return {};
            return Qt::ItemIsEnabled | Qt::ItemIsSelectable | Qt::ItemIsDragEnabled;
        }

        // the default is "copy only", change it
        Qt::DropActions supportedDragActions() const override { return Qt::MoveAction | Qt::CopyAction; }

        QMimeData *mimeData(const QModelIndexList &indexes) const override;

        bool removeRows(int position, int rows, const QModelIndex &parent) override;
    };

☑ Reimplement flags() to add Qt::ItemIsDragEnabled in the case of a valid index

☑ Reimplement supportedDragActions() to return Qt::MoveAction | Qt::CopyAction or whichever you want to support (the default is CopyAction only).

☑ Reimplement mimeData() to serialize the complete data for the dragged items. If the views are always in the same process, you can get away with serializing only node pointers (if you have that) and application PID (to refuse dropping onto another process). See the previous part of this blog series for more details.

☑ Reimplement removeRows(), it will be called after a successful drop with MoveAction. An example implementation looks like this:

    bool EmailsModel::removeRows(int position, int rows, const QModelIndex &parent)
    {
        beginRemoveRows(parent, position, position + rows - 1);
        for (int row = 0; row < rows; ++row) {
            m_emailFolder->emails.removeAt(position);
        }
        endRemoveRows();
        return true;
    }

Setting up the view on the drop side

☑ Call view->setDragDropMode(QAbstractItemView::DropOnly) unless of course it supports dragging too. In our example, we can drop onto email folders but we cannot reorganize the folders, so DropOnly is correct.

Setting up the model on the drop side

To implement dropping items into a model's existing items, you need to do the following:

    class FoldersModel : public QAbstractTableModel
    {
        ~~~
        Qt::ItemFlags flags(const QModelIndex &index) const override
        {
            CHECK_flags(index);
            if (!index.isValid())
                return {}; // do not allow dropping between items
            if (index.column() > 0)
                return Qt::ItemIsEnabled | Qt::ItemIsSelectable; // don't drop on other columns
            return Qt::ItemIsEnabled | Qt::ItemIsSelectable | Qt::ItemIsDropEnabled;
        }

        // the default is "copy only", change it
        Qt::DropActions supportedDropActions() const override { return Qt::MoveAction | Qt::CopyAction; }

        QStringList mimeTypes() const override { return {QString::fromLatin1(s_emailsMimeType)}; }

        bool dropMimeData(const QMimeData *mimeData, Qt::DropAction action, int row, int column, const QModelIndex &parent) override;
    };

☑ Reimplement flags(). For a valid index (and only in that case), add Qt::ItemIsDropEnabled. As you can see, you can also restrict drops to column 0, which can be more sensible when using QTreeView (the user should drop onto the folder name, not onto the folder size).

☑ Reimplement supportedDropActions() to return Qt::MoveAction | Qt::CopyAction or whichever you want to support (the default is CopyAction only).

☑ Reimplement mimeTypes() - the list should include the MIME type used by the drag model.

☑ Reimplement dropMimeData() to deserialize the data and handle the drop.

This could mean calling setData() to replace item contents, or anything else that should happen on a drop: in the email example, this is where we copy or move the email into the destination folder. Once you're done, return true, so that the drag side then deletes the dragged rows by calling removeRows() on its model.

    bool FoldersModel::dropMimeData(const QMimeData *mimeData, Qt::DropAction action, int row, int column, const QModelIndex &parent)
    {
        ~~~ // safety checks, see full example code

        EmailFolder *destFolder = folderForIndex(parent);

        const QByteArray encodedData = mimeData->data(s_emailsMimeType);
        QDataStream stream(encodedData);

        ~~~ // code to detect and reject dropping onto the folder currently holding those emails

        while (!stream.atEnd()) {
            QString email;
            stream >> email;
            destFolder->emails.append(email);
        }

        emit dataChanged(parent, parent); // update count

        return true; // let the view handle deletion on the source side by calling removeRows there
    }

Using item widgets

Example code:

On the "drag" side

☑ Call widget->setDragDropMode(QAbstractItemView::DragOnly) or DragDrop if it should support both

☑ Call widget->setDefaultDropAction(Qt::MoveAction) so that the drag defaults to a move rather than a copy; adjust as needed.

☑ Reimplement Widget::mimeData() to serialize the complete data for the dragged items. If the views are always in the same process, you can get away with serializing only item pointers and application PID (to refuse dropping onto another process). In our email folders example we also serialize the pointer to the source folder (where the emails come from) so that we can detect dropping onto the same folder (which should do nothing).

To serialize pointers in QDataStream, cast them to quintptr, see the example code for details.
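The pointer-plus-PID idea can be sketched without Qt as follows. This is only a minimal illustration under stated assumptions: `Email`, `appendRaw`, `encodePointers` and `decodePointers` are hypothetical names, a plain byte vector stands in for the QByteArray written through QDataStream, and `std::uintptr_t` plays the role of quintptr. The process id is passed in as a parameter rather than queried.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical payload type standing in for a dragged item.
struct Email {
    std::string subject;
};

// Append the raw bytes of a trivially copyable value to the buffer.
template <typename T>
void appendRaw(std::vector<unsigned char> &buffer, const T &value)
{
    const unsigned char *bytes = reinterpret_cast<const unsigned char *>(&value);
    buffer.insert(buffer.end(), bytes, bytes + sizeof(T));
}

// Drag side: write the process id, then each pointer converted to an integer.
std::vector<unsigned char> encodePointers(std::int64_t pid, const std::vector<Email *> &items)
{
    std::vector<unsigned char> buffer;
    appendRaw(buffer, pid);
    for (Email *item : items)
        appendRaw(buffer, reinterpret_cast<std::uintptr_t>(item));
    return buffer;
}

// Drop side: refuse data from another process (or malformed data), because
// the pointers would be meaningless there. Returns true and fills `out` on success.
bool decodePointers(std::int64_t ownPid, const std::vector<unsigned char> &buffer,
                    std::vector<Email *> &out)
{
    out.clear();
    std::int64_t pid = 0;
    if (buffer.size() < sizeof(pid))
        return false;
    std::memcpy(&pid, buffer.data(), sizeof(pid));
    if (pid != ownPid)
        return false;
    if ((buffer.size() - sizeof(pid)) % sizeof(std::uintptr_t) != 0)
        return false;
    for (std::size_t offset = sizeof(pid); offset < buffer.size(); offset += sizeof(std::uintptr_t)) {
        std::uintptr_t raw = 0;
        std::memcpy(&raw, buffer.data() + offset, sizeof(raw));
        out.push_back(reinterpret_cast<Email *>(raw));
    }
    return true;
}
```

In a Qt application the process id would presumably come from QCoreApplication::applicationPid(), and the source folder pointer mentioned above could be written the same way, ahead of the item pointers.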

On the "drop" side

☑ Call widget->setDragDropMode(QAbstractItemView::DropOnly) or DragDrop if it should support both

☑ Call widget->setDragDropOverwriteMode(true) for a minor improvement: no forbidden cursor when moving the drag between folders. Instead, Qt only computes drop positions that are on items, which is what we want here.

☑ Reimplement Widget::mimeTypes() and return the same name as the one used on the drag side's mimeData

☑ Reimplement Widget::dropMimeData() (note that the signature differs between QListWidget, QTableWidget and QTreeWidget). This is where you deserialize the data and handle the drop. In the email example, this is where we copy or move the email into the destination folder.

Make sure to do all of the following:

- any necessary behind the scenes work (in our case, moving the actual email)

- updating the UI (creating or deleting items as needed)

This is a case where proper model/view separation is actually much simpler.
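The behind-the-scenes part of such a drop, including the rejection of a drop onto the folder that already holds the emails, can be sketched without Qt types. Everything here is an assumption made for illustration: `EmailFolder`, `DropAction` and `dropEmails` are hypothetical names, and the dragged emails are identified by unique, valid row indices into the source folder.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for the example's folder type.
struct EmailFolder {
    std::string name;
    std::vector<std::string> emails;
};

enum class DropAction { Copy, Move };

// Handle a drop of the emails at `rows` (unique indices into source.emails)
// onto `dest`. Returns false when the drop is rejected.
bool dropEmails(EmailFolder &source, const std::vector<std::size_t> &rows,
                EmailFolder &dest, DropAction action)
{
    if (&source == &dest)
        return false; // dropping onto the same folder should do nothing

    // Add the emails to the destination folder.
    for (std::size_t row : rows)
        dest.emails.push_back(source.emails.at(row));

    // For a move, remove them from the source, highest row first so that
    // earlier removals do not shift the indices still to be removed.
    if (action == DropAction::Move) {
        std::vector<std::size_t> sorted(rows);
        std::sort(sorted.begin(), sorted.end(), std::greater<std::size_t>());
        for (std::size_t row : sorted)
            source.emails.erase(source.emails.begin() + static_cast<std::ptrdiff_t>(row));
    }
    return true;
}
```

With item widgets, the caller would still have to create or delete the corresponding items after this returns; with a model, the begin/end notifications take care of the views.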

Improvements to Qt

While writing and testing these code examples, I improved the following things in Qt, in addition to those listed in the previous blog posts:

- QTBUG-2553 QTreeView with setAutoExpandDelay() collapses items while dragging over it, fixed in Qt 6.8.1

Conclusion

I hope you enjoyed this blog post series and learned a few things.

The post Model/View Drag and Drop in Qt - Part 3 appeared first on KDAB.
